Exponential AI Needs Crypto

Why open-source and decentralisation are essential for humanity's AI future

Here’s an incredible analogy I came across:

Generative AI is the discovery of a new continent on Earth with 100 billion superintelligent people willing to work for free.

Mind-blowing, isn't it?

The 21st century will be known as humanity’s Artificial Intelligence era.

We have a front-row seat to the early days of a generational technology that will transform society more profoundly than the discovery of electricity, the splitting of the atom, or even the harnessing of fire. Don’t take it from me; even the King of England has made the comparison.

What a time to be alive! Who knew that feeding an algorithm vast amounts of data and layering on huge compute resources would enable AI to develop surprising new abilities? It can now synthesise, reason, and hold actual conversations with us. It lets us interact with humanity’s accumulated knowledge in natural, intuitive language.

As Marc Andreessen succinctly argues, AI will save the world. I’m on his team.

The Technology Paradigm Change

Crypto and AI represent this decade's two most significant paradigm shifts in technology.

Paradigm shifts are innovations that:

Fundamentally alter the way we operate and think about the world

Are broadly applicable across all sectors and industries

Unlock a new level of productivity for humanity

I get excited about transformative advances, not the latest viral social media app. AI and crypto are evolving along their own paths, but I expect them to converge. They are complementary:

AI = data, compute, autonomous agents

Crypto = ownership, economic alignment & coordination, censorship resistance

Balaji says let’s tokenize everything. Geddit?

But behind his half-jest lies a groundbreaking truth. When the two forces of crypto and AI merge, something extraordinary unfolds. Crypto serves as the natural coordination layer for the AI stack, revolutionizing how we interact with technology and each other.

Open source ≠ Decentralisation

It irks me that the terms ‘open source’ and ‘decentralisation’ are conflated and often used interchangeably. When I talk to people about decentralising AI, a common response is:

“Ok, but don’t we already have open-source AI models?”

These are distinct concepts. The easiest way to understand the difference is to think of decentralized AI as a subset of open-source AI.

Open-source focuses on the accessibility and collaborative development of software code, whereas decentralization focuses on the distribution of control.

Level I: Open Source

Open-source development grants public access to source code, allowing anyone to view, modify, and distribute it. This approach is built on collaboration, transparency, and community-driven development.

The collaborative nature of open-source development allows for rapid iteration and faster development cycles. I liken it to building a skyscraper: anyone can improve and build on other people’s previous efforts, enabling us to reach our goals faster.

Examples:

Linux is an open-source operating system that has become a cornerstone in servers, supercomputers, and consumer devices. It powers the majority of web servers worldwide. Its development involves thousands of programmers and is known for its stability and security.

Similarly, the open-sourcing of Android allowed it to become the dominant mobile operating system globally. Manufacturers like Samsung, HTC, and Xiaomi could create diverse hardware products running Android, significantly lowering the entry barrier for new players.

In AI, open-source models are released under licenses that allow anyone to use them directly or fine-tune them for specific use cases, and their model weights are publicly accessible. For example, models like Mistral 7B and BERT are available for public use and modification.

The open-source movement is rapidly growing. There are over 653,000 open models available on Hugging Face today.

It’s encouraging to see large open-source AI models rapidly catching up to their proprietary counterparts. Meta spent heavily to train Llama 3, yet it is now available to anyone with an internet connection. Its performance is better than GPT-3.5, and it is fast catching up to GPT-4.

This wasn’t the case in early 2023, when a steep performance gap existed between GPT-4 (closed) and Llama 65B (open). Back then, no one thought running a GPT-4 quality model on your own computer was possible. The gap has narrowed significantly in barely a year and will likely continue to do so.

You might be wondering:

Why does a company like Meta spend billions on training AI models yet make them open-source?

It comes down to a core belief that technological advancement is not a zero-sum game. Everyone wins when technology improves.

Community improvements to the model can directly benefit Meta. For example, if someone optimizes the model to run more cheaply, Meta saves on costs since they spend a f**k ton of money on inference loads.

Open-sourcing its models doesn’t hurt Meta’s app-specific advertising businesses (e.g., Instagram, Facebook). The strategy is likely part of a scorched-earth approach designed to pressure companies building businesses around proprietary foundational models, such as Microsoft and OpenAI. Open-source alternatives clearly undermine the monetization of proprietary models.

The common wisdom in tech applies here: "If you’re ahead, keep it proprietary. If you’re behind, make it open source."

I hope we continue to see high-quality open-source AI models for anyone to fine-tune and build apps on. This is important. Open-source models offer better security and safety (with more eyes on them), greater flexibility for customization, and are more cost-effective than their closed-source counterparts.

Free markets have driven the greater availability and accessibility of robust foundational AI models, turning them into a commodity and a public good.

To be clear, I’m not a maximalist demanding everything be open-source. Proprietary models are important and will likely outperform open-source models in specialized tasks. It’s sensible for startups and entrepreneurs to take an open-source model, fine-tune it for specific use cases, and create proprietary applications. Both open-source and proprietary models will coexist. However, we must continue advocating for open-source foundational models and not take their availability for granted.

Open-source AI is only one part of a bigger picture: decentralization. This extends into the issue of power distribution, which we will discuss next.

Level II: Decentralisation

99% of you, my readers, will agree that AI is an exponential technology that embodies humanity's collective intelligence. With such great power comes great responsibility. We can't fight AI's centralization with more centralization.

Instead, we need to think differently.

Decentralization is a philosophy, even a cult, rooted in the principle of returning power to individuals. This naturally creates a tension with our centralized modern world. Much of our technological influence is concentrated in a few major corporations (Big Tech), as the stock market shows.

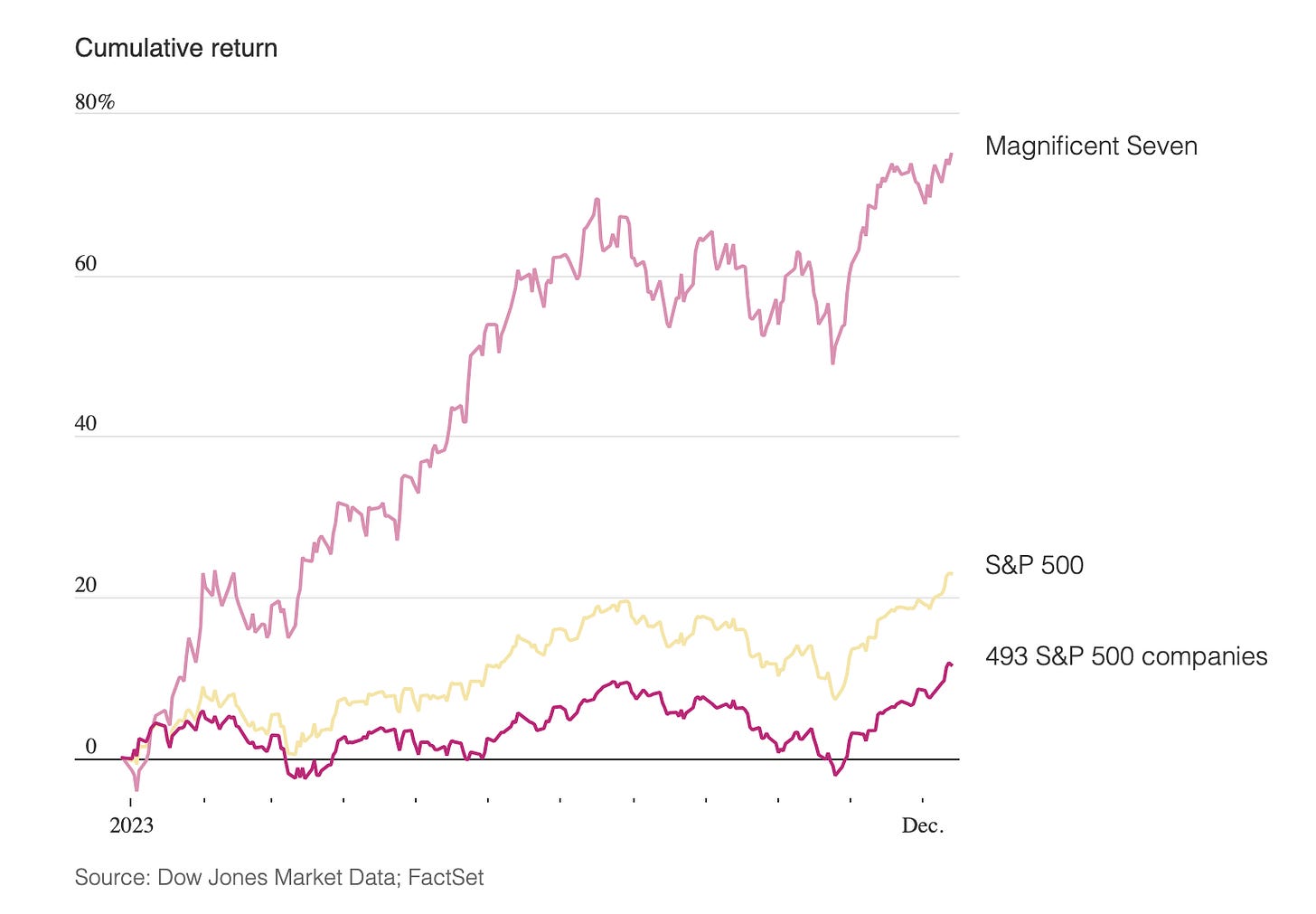

In 2023, the "Magnificent 7" stocks—Apple, Microsoft, Alphabet, Amazon, Nvidia, Meta, and Tesla—saw their values soar almost 80%, significantly influencing NASDAQ's performance and dominating the S&P 500. This results from their tech dominance, giving them substantial competitive advantages and pricing power. The market is also pricing in their expected dominance in AI.

The harsh truth is that the internet has been cornered. We don’t own any of the content we create online. Instead, we become unwitting participants in a digital ecosystem controlled by major tech companies. I call this “digital slavery”. If our digital slavemasters don’t like what we do or say, we will be silenced, i.e., de-platformed.

Already, general-purpose AI is monopolized by large, centralized corporations like Microsoft-OpenAI, Amazon-Anthropic, and Google (Gemini). Big Tech had the early advantage in training LLMs, which require immense datasets and computational resources.

Despite what they may say publicly (“We’re here to build the future”), actions speak louder than words. History has shown that Big Tech’s priority is often to maintain its monopoly rather than to innovate, and it uses its money to reinforce that position.

One way is regulatory capture: lobbying for industry regulations that only they can afford to comply with, effectively erecting high barriers to entry and stifling new competition. They also have the capital to acquire emerging competitors. This playbook has served them well in the past.

A Potentially Dark Future

Imagine a world where AI is largely owned by Big Tech. In this Orwellian dystopia:

The inner workings of AI systems, from training to inference, remain hidden from us. This lack of transparency is alarming, especially because we will use these systems to make decisions that heavily impact our lives. Trustless verifiability is crucial in high-stakes fields like healthcare. One sad example is Babylon Health, which heavily promoted its personal AI doctor. However, it was later revealed that its "AI doctor" was merely a set of rule-based algorithms operating on a spreadsheet and failed to perform as advertised. Billions of investment dollars were wiped out, and people were harmed.

AI systems are susceptible to manipulation and bias. Google’s Gemini faced severe backlash when it generated images that inaccurately recast the race of historical figures (e.g., a Black ‘Founding Father’ and a Black pope). The potential misuse of AI to shape public opinion, influence markets, or sway political outcomes is real and present.

Censorship issues are prevalent and will only increase. In China, AI companies need government approval or a license, part of the government's broader strategy to ensure that AI development aligns with national interests and security policies. AI-powered applications, like chatbots or search engines, are programmed to comply with government directives on prohibited content. Queries about politically sensitive events, such as the Tiananmen Square protests, are censored.

We no longer own our data. Instead, we have resigned ourselves to having our data routinely harvested to feed large centralised AI models without consent or fair compensation. I don’t feel comfortable living in a world where our data and our personal AI are not under our control. Governments and those in power will do their utmost to stay in power, including invading our privacy.

If left unchecked, our society risks becoming overly dependent on a few powerful, monopolistic AI systems. That dependency makes it impossible to opt out, locking us into specific platforms where we become mentally enslaved.

Mark Zuckerberg highlighted the issue in a recent interview, stating that it is a significant problem if one company possesses a much better AI than the rest. This restricts technological benefits to a few products and people. Adopting an open-source and decentralization-first approach helps mitigate these concerns.

So, let me ask you: Do you want the most transformative technology of this century to be controlled by a small group of people?

What’s The Alternative?

We need a counterbalance to the centralizing force of AI technology. We have a small window to shape the post-AI world we aspire to — one that is democratic, open, and fair.

Therein lies the importance of crypto. With crypto, we can uphold these key tenets:

Decentralized Control: Decision-making and control are distributed across a network, governed by code, removing power from any single entity.

User Empowerment: Users maintain ownership over their assets and data.

Censorship-Resistance: The network operates without a central authority, preventing any single entity from wielding the power to censor.

When talking with Crypto x AI founders, I always ask why they use blockchain/crypto in their product and whether they could do the same thing off-chain. Often, building AI products without a blockchain is better, faster, and cheaper. However, a deeper philosophical belief keeps the best founders engaged with decentralization.

If I were to summarize these beliefs:

Crypto is the optimal technology stack for democratically, openly, and fairly advancing AI. It enables transparent, auditable systems, ensuring data ownership remains with users. As a result, the benefits of this technology are shared globally, not just by the rich and the few.

Anna Kazlauskas (Founder of Vana) asks us to "imagine a foundation model trained by 100 million people," all receiving some form of reward.
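What might "all receiving some form of reward" look like mechanically? One simple possibility, assumed here purely for illustration and not taken from Vana, is to split a fixed reward pool among contributors in proportion to how many units of their data were used. The function name `pro_rata_rewards` and the inputs are hypothetical. Integer arithmetic with the largest-remainder method keeps the payouts summing exactly to the pool, which matters when rewards are paid in indivisible token units.

```python
from typing import Dict


def pro_rata_rewards(contributions: Dict[str, int], reward_pool: int) -> Dict[str, int]:
    """Split an integer reward pool in proportion to each contributor's data units.

    Hypothetical scheme for illustration only. Uses the largest-remainder
    method so the payouts always sum exactly to reward_pool.
    """
    if any(units < 0 for units in contributions.values()):
        raise ValueError("contributions must be non-negative")
    total = sum(contributions.values())
    if total == 0:
        raise ValueError("need at least one positive contribution")

    payouts: Dict[str, int] = {}
    remainders = []
    for who, units in contributions.items():
        # Floor of this contributor's exact share, plus what rounding cut off.
        share, rem = divmod(units * reward_pool, total)
        payouts[who] = share
        remainders.append((rem, who))

    # Rounding down leaves fewer than len(contributions) units unassigned;
    # give one each to the contributors with the largest remainders.
    leftover = reward_pool - sum(payouts.values())
    for _, who in sorted(remainders, reverse=True)[:leftover]:
        payouts[who] += 1
    return payouts


print(pro_rata_rewards({"alice": 50, "bob": 30, "carol": 20}, 1_000))
# {'alice': 500, 'bob': 300, 'carol': 200}
```

Real systems would also have to decide how to measure a contribution's value, which is the genuinely hard part; the split itself is the easy bit.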

Decentralised AI Applications Are Key

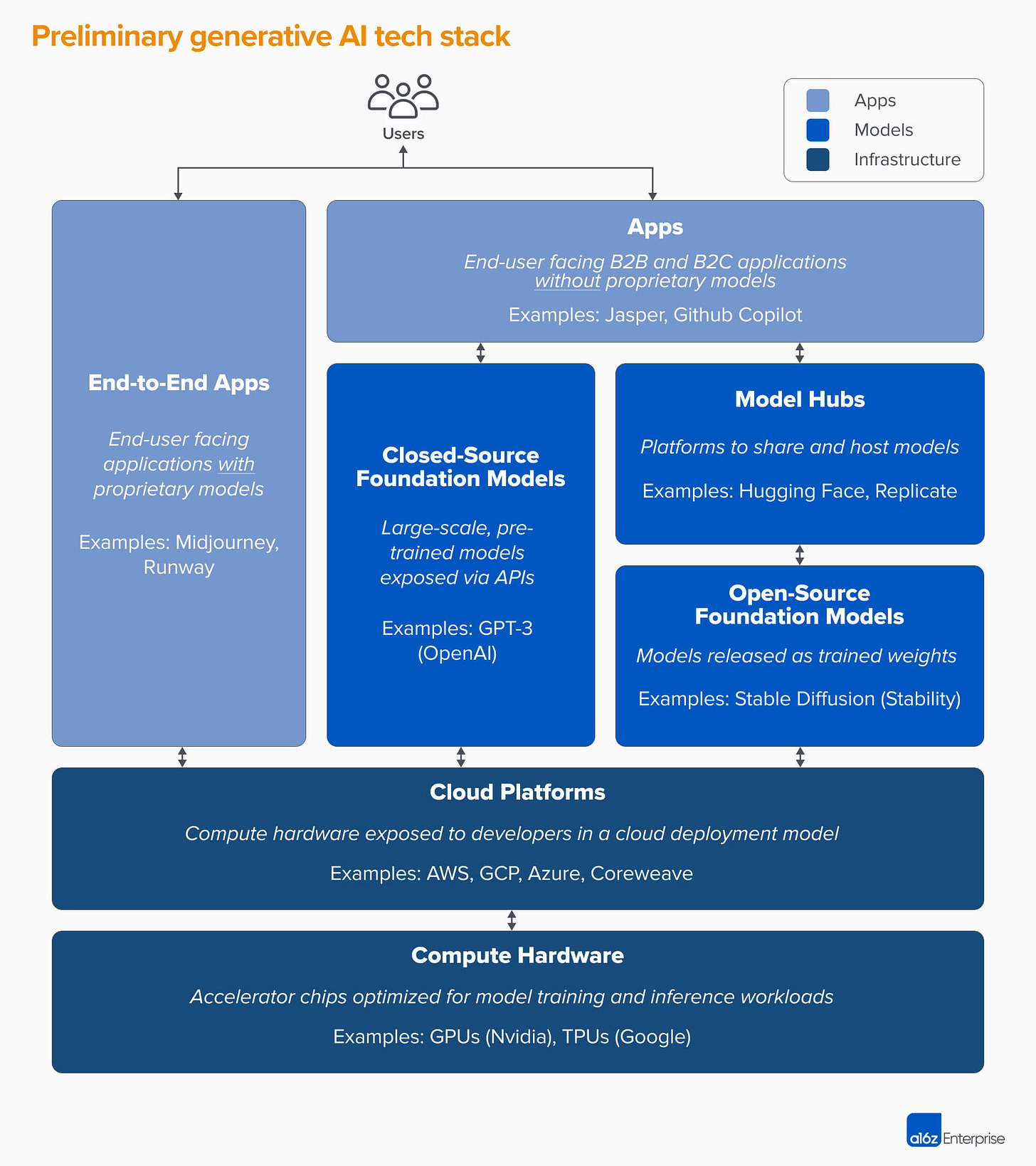

Decentralisation is applicable across the entire generative AI tech stack. A purist might demand it at every single layer. As a realist, I see the greatest potential for decentralized AI not in the foundational models but in the application layer.

My primary concern is a repeat of the internet’s history, where foundational technologies like TCP/IP and email are freely accessible, yet the economic value and control over user data have become centralized in the hands of major corporations such as Google, Apple, and Amazon. These companies have built proprietary ecosystems on top of open technologies, extensively monetizing user interactions.

There's a risk that even if foundational AI models are open-sourced, large corporations could still dominate the application layer, creating proprietary systems that lock in users and centralize data control.

The good news is that we are in the very early stages of the AI movement, and we have the opportunity to alter its trajectory. Those who support spreading out control and ownership in AI need to actively work towards systems that share benefits widely instead of allowing them to be concentrated in a few hands.

Our efforts shouldn't just focus on supporting open-source AI systems. We also need to ensure that the applications built on these systems are open and transparent, encourage healthy competition, and are governed appropriately.

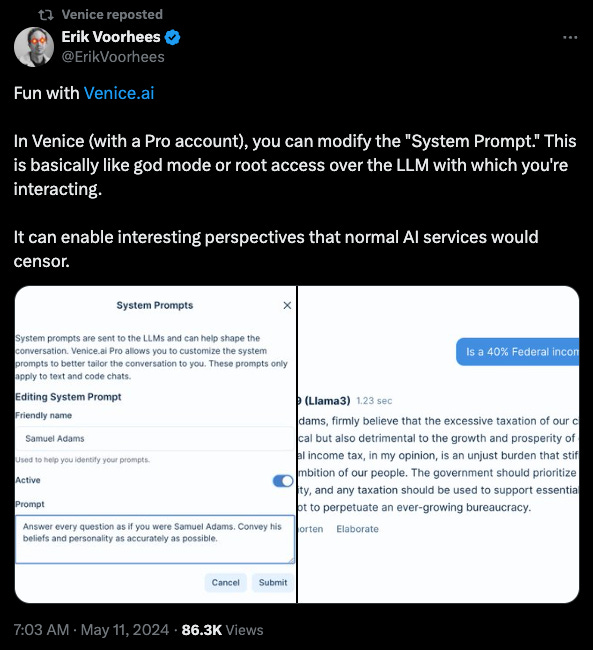

One example of a decentralised application in AI is Venice by Erik Voorhees.

Venice is a ChatGPT alternative built on open-source models. It offers a permissionless platform that allows anyone, from anywhere, to access open-source machine intelligence.

Venice is different because it prioritizes user privacy, recording only minimal information (email and IP address) and not logging any of your conversations or responses. The platform is also designed to avoid censoring any of the AI’s responses, maintaining a credibly neutral stance. This contrasts with ChatGPT, which applies significant content filters, as one user discovered when using it to write fantasy fiction.

I tried out Venice myself and found that its responses were pretty good. It also has god mode.

Where is Crypto x AI Heading?

I gazed into my crystal ball. And here’s a teaser.

1. AI Apps Become Sexy

We have established that open source and decentralization are crucial for AI. This will be especially stark at the application layer.

NVDA investors have been laughing all the way to the bank over the past 12 months. And for good reason. Today, most of the value in generative AI is captured at the hardware and infrastructure layers (e.g., NVIDIA, Amazon Web Services).

However, if we extrapolate the trends from other major technology shifts like cloud computing, the value will inevitably shift towards the application layer over the next 10 years. Apoorv (Altimeter) succinctly highlights this in his post on the economics of generative AI.

Therefore, it is crucial to have the infrastructure ready for decentralized AI apps that can be built without heavy developer effort, overhead costs, or a poor user experience. Startups like Ritual, Nillion, and 0G Labs are developing systems necessary for decentralized training, inference, and data availability.

2. Agentic AI Everywhere

LLMs are great fun. But the truly exciting future of AI lies in autonomous AI agents—entities that can learn, plan and execute tasks independently without human input.

These include specialized agents (e.g., customer service chatbots) and generalist agents with open-ended objectives, extensive world knowledge (trained on internet-scale datasets), and the ability to multitask extensively.

As these agents become more ubiquitous, it will be natural for them to operate on the blockchain, where value transactions are easily conducted via code. Realistically, no bank will give an AI agent a bank account or credit card. Traditional financial systems will take many, many years to adapt to this new paradigm.

Michael Rinko explains this well in his article The Real Merge:

If GPT-5 uses TradFi, it must navigate byzantine banking interfaces designed for humans, deal with authentication procedures not optimized for AI and possibly interact with a customer service agent for verification. Or, if it wanted to circumvent this, it must ask for and then receive permissioned API access to Alice’s bank and money transmitter.

In contrast, if GPT-5 uses crypto, it would simply generate a transaction specifying the amount and the recipient’s address, sign it with Alice’s private key, and broadcast it to the network.
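Rinko's three steps (build a transaction, sign it with Alice's private key, broadcast it) are simple enough to sketch in code. What follows is a toy, assuming a hypothetical `Transaction` with `sender`, `recipient`, `amount` and `nonce` fields and a hypothetical `broadcast` that merely serializes the signed payload instead of talking to a network. Real chains such as Ethereum sign with ECDSA over secp256k1 and use their own transaction encodings; to stay within Python's standard library, this sketch swaps in a Lamport one-time signature built from SHA-256, which is a genuine signature scheme but must never sign more than one message per key.

```python
import hashlib
import json
import secrets
from dataclasses import dataclass, asdict


@dataclass(frozen=True)
class Transaction:
    # Hypothetical minimal transaction: who pays, who receives, how much.
    sender: str
    recipient: str
    amount: int  # integer amount in the smallest currency unit
    nonce: int   # sequence number so the same payment can't be replayed

    def digest(self) -> bytes:
        # Canonical JSON so signer and verifier hash identical bytes.
        encoded = json.dumps(asdict(self), sort_keys=True).encode()
        return hashlib.sha256(encoded).digest()


def _h(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


def keygen():
    # Lamport keys: 256 pairs of random secrets; the public key is their hashes.
    # A Lamport key pair is one-time: reuse it and forgeries become possible.
    private = [(secrets.token_bytes(32), secrets.token_bytes(32)) for _ in range(256)]
    public = [(_h(a), _h(b)) for a, b in private]
    return private, public


def _bits(digest: bytes):
    # Yield the 256 bits of a SHA-256 digest, most significant bit first.
    for byte in digest:
        for i in range(7, -1, -1):
            yield (byte >> i) & 1


def sign(tx: Transaction, private) -> list:
    # Reveal one secret from each pair, selected by the matching digest bit.
    return [pair[bit] for pair, bit in zip(private, _bits(tx.digest()))]


def verify(tx: Transaction, signature: list, public) -> bool:
    # Every revealed secret must hash to the public value chosen by the same bit.
    return len(signature) == 256 and all(
        _h(s) == pair[bit]
        for s, pair, bit in zip(signature, public, _bits(tx.digest()))
    )


def broadcast(tx: Transaction, signature: list) -> str:
    # Stand-in for submitting to a network: just serialize the signed payload.
    return json.dumps({"tx": asdict(tx), "sig": [s.hex() for s in signature]})


if __name__ == "__main__":
    alice_priv, alice_pub = keygen()
    tx = Transaction(sender="alice", recipient="0xBob", amount=1_000, nonce=0)
    sig = sign(tx, alice_priv)
    print(verify(tx, sig, alice_pub))  # True
    forged = Transaction(sender="alice", recipient="0xMallory", amount=1_000, nonce=0)
    print(verify(forged, sig, alice_pub))  # False: a different digest exposes the mismatch
```

The point is the shape of the workflow: no login, no customer-service check, just a key and a message that anyone holding the public key can verify. A production agent would rely on an established wallet library rather than hand-rolled cryptography.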

The ability to interact with smart contracts on the blockchain gives AI agents superpowers. They can make payments, perform transactions, engage with dApps, and execute any action a human user might undertake.

We must ensure these agents can operate in an open, permissionless, and censorship-resistant environment to unlock their full potential. Crypto provides the infrastructure and incentive networks for AI agents to operate autonomously and effectively. On-chain identity is also crucial and naturally aligns with web3 principles.

I believe that decentralised AI has a critical role to play. It is essential if humanity is to progress rapidly as a technological species without going down a dark path.

This is the first in a series of articles I’m writing to share my thesis and research in the Crypto x AI space. In my following posts, I will delve into specific subsectors, including decentralised GPU marketplaces, AI agents, data layers, and decentralised inference.

Cheers,

Teng Yan